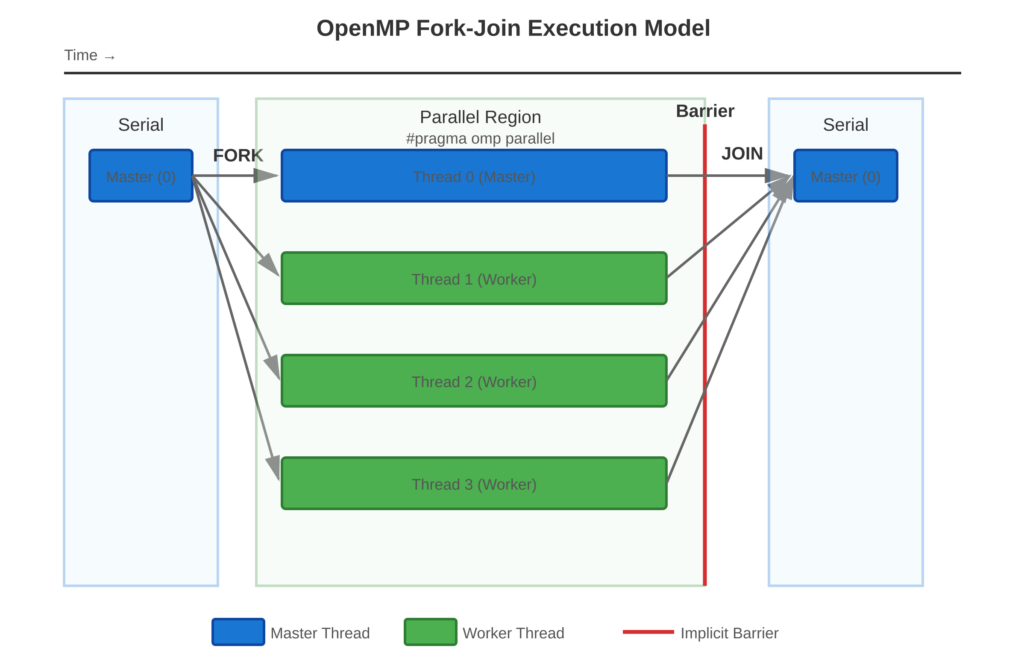

OpenMP uses a fork-join model. The main thread creates (forks) a team of threads that work together. When the threads finish their work, they synchronize and the team is dissolved (joins), and the main thread continues on its own. This model keeps the structure of a parallel program clear, and understanding it is essential for knowing how OpenMP programs work.

Refer to the accompanying diagram for a visualization of the fork-join model.

Core Concept

An OpenMP program starts with a single main thread that executes serially. When it reaches a parallel directive (#pragma omp parallel), the main thread creates a team of threads and becomes thread 0 of that team. Each thread executes the code of the parallel region independently. At the end of the region, the threads wait for each other at an implicit barrier so that all work is complete. The team then joins, and only the main thread continues with the rest of the program.

Key Points

- Master Thread: Thread 0, always present throughout program execution

- Fork: Creating additional threads at parallel region entry

- Thread Team: Group of threads (including master) executing parallel work

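- Join: All threads synchronize at the end of the region and only the master continues; worker threads terminate or, in many implementations, return to a pool for reuse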

- Implicit Barrier: Automatic synchronization at parallel region exit

- Alternating Regions: Programs alternate between serial and parallel execution

Visual Aid: The accompanying diagram.svg illustrates the fork-join lifecycle as a timeline.

Code Example

Scenario: Program demonstrating multiple fork-join cycles with sequential sections

OpenMP Implementation:

// Reference: examples/fork_join_demo.c

#include <stdio.h>
#include <omp.h>

int main() {
    printf("=== Serial region (Master only) ===\n");
    printf("Thread %d executing\n\n", omp_get_thread_num());

    // First parallel region (Fork)
    #pragma omp parallel
    {
        printf("[Region 1] Thread %d working\n", omp_get_thread_num());
    }
    // Implicit barrier and join happens here

    printf("\n=== Serial region (Master only) ===\n");
    printf("Thread %d back to serial\n\n", omp_get_thread_num());

    // Second parallel region (Fork again)
    #pragma omp parallel num_threads(2)
    {
        printf("[Region 2] Thread %d working\n", omp_get_thread_num());
    }
    // Join again

    printf("\n=== Serial region (Master only) ===\n");
    printf("Thread %d finished\n", omp_get_thread_num());

    return 0;
}

Expected Output:

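=== Serial region (Master only) ===
Thread 0 executing

[Region 1] Thread 0 working
[Region 1] Thread 2 working
[Region 1] Thread 1 working
[Region 1] Thread 3 working

=== Serial region (Master only) ===
Thread 0 back to serial

[Region 2] Thread 0 working
[Region 2] Thread 1 working

=== Serial region (Master only) ===
Thread 0 finished

Note: This output assumes a default team of four threads in Region 1. The number of threads and the order of the lines within each parallel region can differ between runs and systems.

Usage & Best Practices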

When to Use

- Identifying computation-intensive code sections for parallelization

- Structuring parallel algorithms with clear synchronization points

- Debugging parallel execution by understanding thread lifecycles

Best Practices

- Keep parallel regions computationally significant (avoid fine-grained parallelism)

- Understand that implicit barriers incur synchronization overhead

- Use the fork-join model to reason about data visibility and race conditions

- Place parallel regions around computational hotspots identified through profiling

Common Mistakes

- Excessive fork-join overhead from too many small parallel regions

Key Takeaways

Summary:

- OpenMP uses the fork-join model: the master thread forks a team of workers, and the team joins at the end of the region

- Parallel regions create thread teams; implicit barriers synchronize at exit

- Programs alternate between serial (master only) and parallel (all threads) execution

- Understanding this model is crucial for correctness and performance

Quick Reference

Fork-Join Lifecycle:

Serial (M) → Fork (M+W) → Parallel Work (all threads) → Join (M+W) → Serial (M)

M = Master, W = Workers